COMPARING PARTITIONED COUPLING VS. MONOLITHIC BLOCK PRECONDITIONING FOR SOLVING STOKES-DARCY SYSTEMS: Difference between revisions

→Procedure: Picture insersted |

No edit summary |

||

| (15 intermediate revisions by 3 users not shown) | |||

| Line 5: | Line 5: | ||

== Problem Statement == | == Problem Statement == | ||

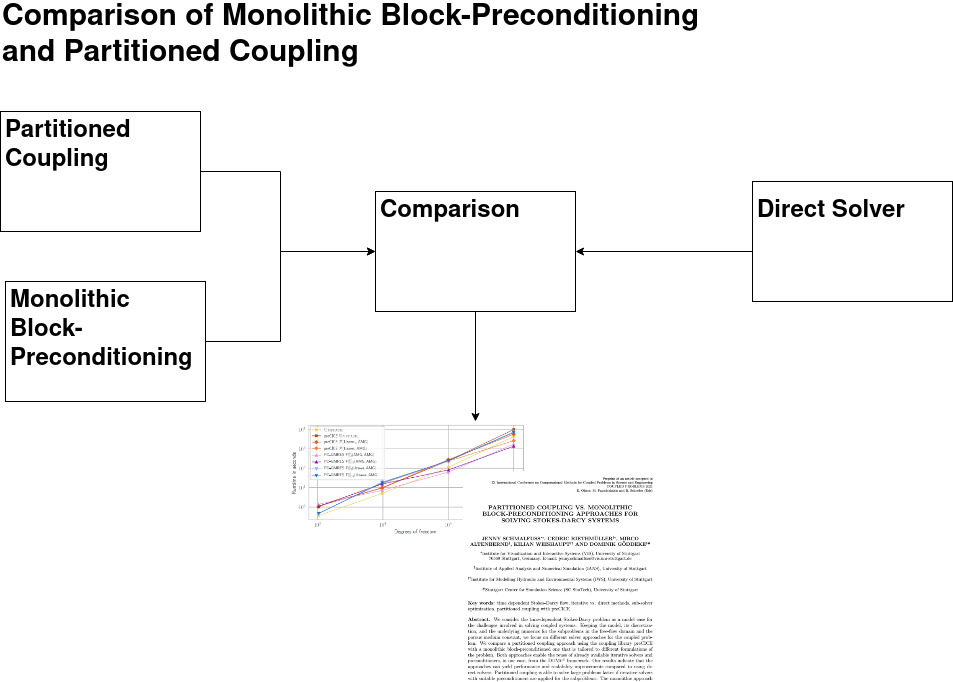

Instationary, coupled Stokes-Darcy two-domain problem: coupled systems of free flow adjacent to permeable (porous) media Two approaches (partioned coupling and monolitihic block preconditioning) are compared against each other and with the direct solving. | Instationary, coupled Stokes-Darcy two-domain problem: [[coupled systems]] of free flow adjacent to permeable (porous) media Two approaches ([[PARTITIONED_COUPLING_FOR_SOLVING_STOKES-DARCY_SYSTEMS | partioned coupling]] and [[MONOLITHIC_APPROACH_FOR_SOLVING_STOKES-DARCY_SYSTEMS | monolitihic block preconditioning]]) are compared against each other and with the direct solving. | ||

=== Object of Research and Objective === | === Object of Research and Objective === | ||

Analysis of the runtime and memory behavior of the two methods "partioned coupling" and "monolitihic block preconditioning" in comparison to the direct solver. The motivation for this is to avoid problems when using simple direct solvers (sparse direct solvers for the (linearized) subproblems): bad parallel scaling, untrustworthy solution with bad conditioning. | Analysis of the runtime and memory behavior of the two methods "[[PARTITIONED_COUPLING_FOR_SOLVING_STOKES-DARCY_SYSTEMS | partioned coupling]]" and "[[MONOLITHIC_APPROACH_FOR_SOLVING_STOKES-DARCY_SYSTEMS |monolitihic block preconditioning]]" in comparison to the direct solver. The motivation for this is to avoid problems when using simple direct solvers (sparse direct solvers for the (linearized) subproblems): bad parallel scaling, untrustworthy solution with bad conditioning. | ||

=== Procedure === | === Procedure === | ||

| Line 34: | Line 34: | ||

=== Variables === | === Variables === | ||

{| | {| class="wikitable" | ||

! Name | ! Name | ||

! Unit | ! Unit | ||

| Line 41: | Line 41: | ||

| Pressure (Dirichlet pressure) | | Pressure (Dirichlet pressure) | ||

| - | | - | ||

| | | <math>p</math> | ||

|- | |- | ||

| Velocity (Neumann velocity) | | Velocity (Neumann velocity) | ||

| - | | - | ||

| | | <math>v</math> | ||

|} | |} | ||

| Line 52: | Line 52: | ||

=== Process Steps === | === Process Steps === | ||

{| | {| class="wikitable" | ||

! Name | ! Name | ||

! Description | ! Description | ||

| Line 64: | Line 64: | ||

| Partioned Coupling Solving | | Partioned Coupling Solving | ||

| Numerical Solution of the Problem with Partioned Coupling | | Numerical Solution of the Problem with Partioned Coupling | ||

| LGS; | | LGS; <math>S^{ff}</math>: <math>v^{pm}*n</math>; <math>S^{pm}</math>: <math>p^{ff}</math> | ||

| LGS_solved_cp, runtime_cp | | LGS_solved_cp, runtime_cp | ||

| Solver (UMFPACK, PD-GMRS, Bi-CGSTAB), Preconditioner (Uzawa, AMG), Coupling (preCICE, Picard iteration; inverse least-squares interface quasi-Newton) | | Solver (UMFPACK, PD-GMRS, Bi-CGSTAB), Preconditioner (Uzawa, AMG), Coupling (preCICE, Picard iteration; inverse least-squares interface quasi-Newton) | ||

| Line 101: | Line 101: | ||

=== Applied Methods === | === Applied Methods === | ||

{| | {| class="wikitable" | ||

! ID | ! ID | ||

! Name | ! Name | ||

| Line 108: | Line 108: | ||

! implemented by | ! implemented by | ||

|- | |- | ||

| wikidata:Q7001954 | | wikidata:[[wikidata:Q7001954|Q7001954]] | ||

| Dirichlet-Neumann coupling | | Dirichlet-Neumann coupling | ||

| Coupling | | Coupling | ||

| Line 114: | Line 114: | ||

| | | | ||

|- | |- | ||

| wikidata:Q1683631 | | wikidata:[[wikidata:Q1683631|Q1683631]] | ||

| Picard iteration mit fixed-point iteration | | Picard iteration mit fixed-point iteration | ||

| Coupling | | Coupling | ||

| Line 120: | Line 120: | ||

| | | | ||

|- | |- | ||

| wikidata:Q25098909 | | wikidata:[[wikidata:Q25098909|Q25098909]] | ||

| inverse least-squares interface quasi-Newton | | inverse least-squares interface quasi-Newton | ||

| Coupling | | Coupling | ||

| Line 126: | Line 126: | ||

| | | | ||

|- | |- | ||

| wikidata:Q1069090 | | wikidata:[[wikidata:Q1069090|Q1069090]] | ||

| block-Gauss-Seidel method | | block-Gauss-Seidel method | ||

| Preconditioner | | Preconditioner | ||

| Line 132: | Line 132: | ||

| | | | ||

|- | |- | ||

| wikidata:Q2467290 | | wikidata:[[wikidata:Q2467290|Q2467290]] | ||

| Umfpack | | Umfpack | ||

| Solver | | Solver | ||

| Line 138: | Line 138: | ||

| | | | ||

|- | |- | ||

| wikidata:Q56564057 | | wikidata:[[wikidata:Q56564057|Q56564057]] | ||

| PD-GMRES | | PD-GMRES | ||

| Solver | | Solver | ||

| Line 144: | Line 144: | ||

| | | | ||

|- | |- | ||

| wikidata:Q56560244 | | wikidata:[[wikidata:Q56560244|Q56560244]] | ||

| Bi-CGSTAB | | Bi-CGSTAB | ||

| Solver | | Solver | ||

| Line 150: | Line 150: | ||

| | | | ||

|- | |- | ||

| wikidata:Q1471828 | | wikidata:[[wikidata:Q1471828|Q1471828]] | ||

| AMG method | | AMG method | ||

| Preconditioner | | Preconditioner | ||

| Line 156: | Line 156: | ||

| | | | ||

|- | |- | ||

| wikidata: Q17144437 | | wikidata: [[wikidata:Q17144437|Q17144437]] | ||

| Uzawa-iterations | | Uzawa-iterations | ||

| Preconditioner | | Preconditioner | ||

| Line 162: | Line 162: | ||

| | | | ||

|- | |- | ||

| wikidata:Q1654069 | | wikidata:[[wikidata:Q1654069|Q1654069]] | ||

| ILU(0) factorization | | ILU(0) factorization | ||

| Preconditioner | | Preconditioner | ||

| Line 171: | Line 171: | ||

=== Software used === | === Software used === | ||

{| | {| class="wikitable" | ||

! ID | ! ID | ||

! Name | ! Name | ||

| Line 179: | Line 179: | ||

! Dependencies | ! Dependencies | ||

! versioned | ! versioned | ||

! | ! Published | ||

! | ! Documented | ||

|- | |- | ||

| sw:8713 | | sw:[https://swmath.org/software/8713 8713] | ||

| preCICE | | preCICE | ||

| Library for coupling simulations | | Library for coupling simulations | ||

| Line 189: | Line 189: | ||

| Linux, Boost, MPI, ... | | Linux, Boost, MPI, ... | ||

| [https://github.com/precice/precice https://github.com/precice/precice] | | [https://github.com/precice/precice https://github.com/precice/precice] | ||

| doi | | https://doi.org/10.18419/darus-2125 | ||

| [https://precice.org/docs.html https://precice.org/docs.html] | | [https://precice.org/docs.html https://precice.org/docs.html] | ||

|- | |- | ||

| sw:14293 | | sw:[https://swmath.org/software/14293 14293] | ||

| DuMux | | DuMux | ||

| DUNE for Multi-{Phase, Component, Scale, Physics, …} flow and transport in porous media | | DUNE for Multi-{Phase, Component, Scale, Physics, …} flow and transport in porous media | ||

| Line 202: | Line 202: | ||

| [https://dumux.org/docs/ https://dumux.org/docs/] | | [https://dumux.org/docs/ https://dumux.org/docs/] | ||

|- | |- | ||

| sw:18749 | | sw:[https://swmath.org/software/18749 18749] | ||

| ISTL | | ISTL | ||

| Iterative Solver Template Library” (ISTL) which is part of the “Distributed and Unified Numerics Environment” (DUNE). | | Iterative Solver Template Library” (ISTL) which is part of the “Distributed and Unified Numerics Environment” (DUNE). | ||

| Line 209: | Line 209: | ||

| Linux, DuNE (C++ Framework) | | Linux, DuNE (C++ Framework) | ||

| [https://gitlab.dune-project.org/core/dune-istl https://gitlab.dune-project.org/core/dune-istl] | | [https://gitlab.dune-project.org/core/dune-istl https://gitlab.dune-project.org/core/dune-istl] | ||

| doi | | https://doi.org/10.1007/978-3-540-75755-9_82 | ||

| [https://www.dune-project.org/modules/dune-istl/ https://www.dune-project.org/modules/dune-istl/] | | [https://www.dune-project.org/modules/dune-istl/ https://www.dune-project.org/modules/dune-istl/] | ||

|} | |} | ||

| Line 215: | Line 215: | ||

=== Hardware === | === Hardware === | ||

{| | {| class="wikitable" | ||

! ID | ! ID | ||

! Name | ! Name | ||

| Line 233: | Line 233: | ||

=== Input Data === | === Input Data === | ||

{| | {| class="wikitable" | ||

! ID | ! ID | ||

! Name | ! Name | ||

| Line 240: | Line 240: | ||

! Format Representation | ! Format Representation | ||

! Format Exchange | ! Format Exchange | ||

! | ! Binary/Text | ||

! | ! Proprietary | ||

! | ! To Publish | ||

! | ! To Archive | ||

|- | |- | ||

| | | | ||

| LGS | | LGS | ||

| O( | | O(<math>2*10^6</math>) Matrix size | ||

| Data structure in DUNE/DuMux | | Data structure in DUNE/DuMux | ||

| | | | ||

| Line 259: | Line 259: | ||

=== Output Data === | === Output Data === | ||

{| | {| class="wikitable" | ||

! ID | ! ID | ||

! Name | ! Name | ||

| Line 266: | Line 266: | ||

! Format Representation | ! Format Representation | ||

! Format Exchange | ! Format Exchange | ||

! | ! Binary/Text | ||

! | ! Proprietary | ||

! | ! To Publish | ||

! | ! To Archive | ||

|- | |- | ||

| | | | ||

| Line 293: | Line 293: | ||

| ? | | ? | ||

|- | |- | ||

| arxiv:2108.13229 | | arxiv:[https://arxiv.org/pdf/2108.13229.pdf 2108.13229] | ||

| Paper | | Paper | ||

| O(KB) | | O(KB) | ||

| Line 309: | Line 309: | ||

=== Mathematical Reproducibility === | === Mathematical Reproducibility === | ||

Yes, by all parameters | |||

=== Runtime Reproducibility === | === Runtime Reproducibility === | ||

Yes, for same input samples | |||

=== Reproducibility of Results === | === Reproducibility of Results === | ||

| Line 342: | Line 342: | ||

wikidata: [https://www.wikidata.org/wiki/ https://www.wikidata.org/wiki/] | wikidata: [https://www.wikidata.org/wiki/ https://www.wikidata.org/wiki/] | ||

[[Category:Workflow]] | |||

Latest revision as of 16:19, 15 November 2022

COMPARING PARTITIONED COUPLING VS. MONOLITHIC BLOCK PRECONDITIONING FOR SOLVING STOKES-DARCY SYSTEMS

PID (if applicable): arxiv:2108.13229

Problem Statement

Instationary, coupled Stokes-Darcy two-domain problem: coupled systems of free flow adjacent to permeable (porous) media. Two approaches (partitioned coupling and monolithic block preconditioning) are compared against each other and against a direct solver.

Object of Research and Objective

Analysis of the runtime and memory behavior of the two methods "partitioned coupling" and "monolithic block preconditioning" in comparison to the direct solver. The motivation is to avoid the problems of simple direct solvers (sparse direct solvers for the (linearized) subproblems): poor parallel scaling and unreliable solutions for badly conditioned systems.

Procedure

Solve a Stokes-Darcy system in three ways, namely partitioned coupling, monolithic block preconditioning, and direct solving, and then compare runtime and memory behavior.
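The comparison described above can be illustrated on a toy problem. The following is a minimal sketch, not the workflow's actual setup: it assumes a small hand-made 2x2 block system (all matrix entries are hypothetical), uses plain Gaussian elimination as a stand-in for a sparse direct solver such as UMFPACK, and uses block Gauss-Seidel as a stationary iteration rather than as a preconditioner for a Krylov method.

```python
import time

def solve_dense(A, b):
    """Gaussian elimination with partial pivoting (stand-in for a direct solver)."""
    n = len(b)
    M = [row[:] + [b[i]] for i, row in enumerate(A)]  # augmented copy
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

def matvec(A, x):
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def block_gauss_seidel(A11, A12, A21, A22, b1, b2, tol=1e-12, max_iter=200):
    """Alternate between the two diagonal blocks, always using the newest data."""
    x1, x2 = [0.0] * len(b1), [0.0] * len(b2)
    for it in range(1, max_iter + 1):
        rhs1 = [bi - ci for bi, ci in zip(b1, matvec(A12, x2))]
        x1_new = solve_dense(A11, rhs1)
        rhs2 = [bi - ci for bi, ci in zip(b2, matvec(A21, x1_new))]
        x2_new = solve_dense(A22, rhs2)
        change = max(abs(a - b) for a, b in zip(x1_new + x2_new, x1 + x2))
        x1, x2 = x1_new, x2_new
        if change < tol:
            return x1 + x2, it
    raise RuntimeError("block Gauss-Seidel did not converge")

# Hypothetical toy blocks: diagonal blocks dominate the coupling blocks.
A11 = [[4.0, 1.0], [1.0, 3.0]]
A12 = [[0.5, 0.0], [0.0, 0.5]]
A21 = [[0.5, 0.0], [0.0, 0.5]]
A22 = [[5.0, 2.0], [2.0, 4.0]]
b1, b2 = [1.0, 2.0], [3.0, 4.0]
A_full = [r1 + r2 for r1, r2 in zip(A11, A12)] + [r1 + r2 for r1, r2 in zip(A21, A22)]

t0 = time.perf_counter()
x_direct = solve_dense(A_full, b1 + b2)
t_direct = time.perf_counter() - t0

t0 = time.perf_counter()
x_bgs, iterations = block_gauss_seidel(A11, A12, A21, A22, b1, b2)
t_bgs = time.perf_counter() - t0

max_diff = max(abs(a - b) for a, b in zip(x_direct, x_bgs))
print(f"direct: {t_direct:.2e} s, block Gauss-Seidel: {t_bgs:.2e} s "
      f"({iterations} iterations), max difference: {max_diff:.2e}")
```

At this size the timings carry no meaning. The sketch only shows the shape of the experiment: solve the same system with each strategy, check that the solutions agree, and record the runtime of each.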

Involved Disciplines

Environmental Systems, Mathematics

Data Streams

Model

Stokes flow in the free-flow domain; Darcy’s law for the porous domain

Discretization

- Time: first-order backward Euler scheme

- Space: finite volumes

- Porous domain (Darcy): two-point flux approximation for pressure

- Free-flow domain (Stokes): staggered grid for pressure and velocity, upwind scheme for approximation of fluxes
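To make the Darcy part of this discretization concrete, here is a minimal one-dimensional sketch of a backward Euler step with a cell-centered finite-volume two-point flux approximation. It is an illustration under assumptions, not the DuMux implementation: the storage coefficient, the uniform grid, the Dirichlet pressures at both ends, and all function names are hypothetical, and the staggered-grid Stokes part is not covered.

```python
def harmonic_mean(a, b):
    return 2.0 * a * b / (a + b)

def thomas(lower, diag, upper, rhs):
    """Solve a tridiagonal system (lower[0] and upper[-1] are unused zeros)."""
    n = len(diag)
    c, d = [0.0] * n, [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):
        x[i] = d[i] - c[i] * x[i + 1]
    return x

def backward_euler_tpfa_step(p_old, perm, dx, dt, storage, p_left, p_right):
    """One implicit time step for storage * dp/dt - d/dx(perm * dp/dx) = 0."""
    n = len(p_old)
    lower, diag, upper, rhs = ([0.0] * n for _ in range(4))
    for i in range(n):
        acc = storage * dx / dt          # backward Euler accumulation term
        diag[i] = acc
        rhs[i] = acc * p_old[i]
        if i > 0:                        # interior face to the left neighbor
            t = harmonic_mean(perm[i - 1], perm[i]) / dx
            diag[i] += t
            lower[i] = -t
        else:                            # Dirichlet face, half-cell distance
            t = 2.0 * perm[i] / dx
            diag[i] += t
            rhs[i] += t * p_left
        if i < n - 1:                    # interior face to the right neighbor
            t = harmonic_mean(perm[i], perm[i + 1]) / dx
            diag[i] += t
            upper[i] = -t
        else:
            t = 2.0 * perm[i] / dx
            diag[i] += t
            rhs[i] += t * p_right
    return thomas(lower, diag, upper, rhs)

n_cells = 10
dx = 1.0 / n_cells
perm = [1.0] * n_cells                   # uniform permeability (assumption)
pressure = [0.0] * n_cells
for _ in range(50):
    pressure = backward_euler_tpfa_step(pressure, perm, dx, dt=1.0, storage=1.0,
                                        p_left=1.0, p_right=0.0)
print([round(p, 4) for p in pressure])
```

With uniform permeability the time stepping relaxes to a linear pressure profile between the two boundary values, which the two-point flux approximation reproduces exactly at the cell centers.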

Variables

| Name | Unit | Symbol |
|---|---|---|
| Pressure (Dirichlet pressure) | - | $p$ |
| Velocity (Neumann velocity) | - | $v$ |

Process Information

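Process Steps

| Name | Description | Input | Output | Method |
|---|---|---|---|---|
| Partitioned Coupling Solving | Numerical solution of the problem with partitioned coupling | LGS; $S^{ff}$: $v^{pm} \cdot n$; $S^{pm}$: $p^{ff}$ | LGS_solved_cp, runtime_cp | Solver (UMFPACK, PD-GMRES, Bi-CGSTAB), Preconditioner (Uzawa, AMG), Coupling (preCICE, Picard iteration; inverse least-squares interface quasi-Newton) |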

Applied Methods

| ID | Name | Process Step | Parameter | implemented by |
|---|---|---|---|---|
| wikidata:Q7001954 | Dirichlet-Neumann coupling | Coupling | | |
| wikidata:Q1683631 | Picard iteration with fixed-point iteration | Coupling | | |
| wikidata:Q25098909 | inverse least-squares interface quasi-Newton | Coupling | | |
| wikidata:Q1069090 | block-Gauss-Seidel method | Preconditioner | | |
| wikidata:Q2467290 | UMFPACK | Solver | | |
| wikidata:Q56564057 | PD-GMRES | Solver | k (subiteration parameter, determined automatically), tolerance: relative residual... | |
| wikidata:Q56560244 | Bi-CGSTAB | Solver | tolerance: relative residual... | |
| wikidata:Q1471828 | AMG method | Preconditioner | | |
| wikidata:Q17144437 | Uzawa iterations | Preconditioner | | |
| wikidata:Q1654069 | ILU(0) factorization | Preconditioner | | |
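The Dirichlet-Neumann coupling with a fixed-point (Picard) iteration can be sketched on a toy problem with two scalar "solvers". This is a hedged illustration only: the one-dimensional two-domain diffusion problem, the conductivities, and all function names are assumptions, constant under-relaxation is swapped in for the inverse least-squares interface quasi-Newton acceleration, and preCICE is not used.

```python
def dirichlet_solver(interface_value, k1=1.0, length1=0.5, left_value=1.0):
    """Subdomain 1 receives the interface value (Dirichlet data, pressure-like)
    and returns the flux through the interface (velocity-like)."""
    return k1 * (left_value - interface_value) / length1

def neumann_solver(interface_flux, k2=2.0, length2=0.5, right_value=0.0):
    """Subdomain 2 receives the interface flux (Neumann data)
    and returns the resulting interface value."""
    return right_value + interface_flux * length2 / k2

def couple(relaxation=0.5, tol=1e-12, max_iter=100):
    """Fixed-point iteration on the interface value with constant relaxation."""
    interface_value = 0.0
    for it in range(1, max_iter + 1):
        flux = dirichlet_solver(interface_value)
        new_value = neumann_solver(flux)
        residual = new_value - interface_value
        interface_value += relaxation * residual
        if abs(residual) < tol:
            return interface_value, it
    raise RuntimeError("coupling iteration did not converge")

interface_value, coupling_iterations = couple()
print(f"interface value {interface_value:.12f} after {coupling_iterations} iterations")
```

For these hypothetical parameters the exact interface value is 1/3, since the flux must be continuous across the interface. Each pass through the loop corresponds to one exchange of interface data between the two subdomain solvers, so the iteration count is the quantity an acceleration scheme would aim to reduce.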

Software used

| ID | Name | Description | Version | Programming Language | Dependencies | Versioned | Published | Documented |
|---|---|---|---|---|---|---|---|---|
| sw:8713 | preCICE | Library for coupling simulations | v2104.0 | Core library in C++; bindings for Fortran, Python, C, Matlab; adapters dependent on simulation code (Fortran, Python, C, Matlab) | Linux, Boost, MPI, ... | https://github.com/precice/precice | https://doi.org/10.18419/darus-2125 | https://precice.org/docs.html |
| sw:14293 | DuMux | DUNE for Multi-{Phase, Component, Scale, Physics, …} flow and transport in porous media | | C++, Python bindings, utility scripts in Python | Linux, DUNE (C++ framework), cmake (module chains), package-config, compiler, build-essentials, dpg | https://git.iws.uni-stuttgart.de/dumux-repositories/dumux | https://zenodo.org/record/5152939#.YQva944zY2w | https://dumux.org/docs/ |
| sw:18749 | ISTL | “Iterative Solver Template Library” (ISTL), which is part of the “Distributed and Unified Numerics Environment” (DUNE) | | C++ | Linux, DUNE (C++ framework) | https://gitlab.dune-project.org/core/dune-istl | https://doi.org/10.1007/978-3-540-75755-9_82 | https://www.dune-project.org/modules/dune-istl/ |

Hardware

| ID | Name | Processor | Compiler | #Nodes | #Cores |
|---|---|---|---|---|---|
| | | AMD EPYC 7551P CPU | | 1 | 1 |

Input Data

| ID | Name | Size | Data Structure | Format Representation | Format Exchange | Binary/Text | Proprietary | To Publish | To Archive |
|---|---|---|---|---|---|---|---|---|---|
| | LGS | O($2 \cdot 10^6$) matrix size | Data structure in DUNE/DuMux | | | numbers | open | ? | ? |

Output Data

| ID | Name | Size | Data Structure | Format Representation | Format Exchange | Binary/Text | Proprietary | To Publish | To Archive |
|---|---|---|---|---|---|---|---|---|---|
| | Runtime comparison of the direct solver UMFPACK and iterative solvers with partitioned coupling and block preconditioning | small | statistics | | | numbers | | ? | ? |
| | Runtime comparison of the best performing solver configurations | small | statistics | | | numbers | | ? | ? |
| arxiv:2108.13229 | Paper | O(KB) | | | | text | open | yes | yes |

Reproducibility

Mathematical Reproducibility

Yes, by all parameters

Runtime Reproducibility

Yes, for same input samples

Reproducibility of Results

Due to floating point arithmetic, no bitwise reproducibility
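A short illustration of why bitwise reproducibility is lost: floating-point addition is not associative, so any change in summation order (for example in a parallel reduction) can change the last bits of a result. The numbers below are arbitrary and only serve as a demonstration.

```python
# Same three numbers, two summation orders.
left_to_right = (0.1 + 0.2) + 0.3
right_to_left = 0.1 + (0.2 + 0.3)

difference = abs(left_to_right - right_to_left)
print(left_to_right == right_to_left, difference)
```

The two sums agree to roughly machine precision but are not bitwise identical, which is why results are compared with tolerances rather than for exact equality.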

Reproducibility on original Hardware

Reproducibility on other Hardware

a) Serial Computation

b) Parallel Computation

Transferability to

a) similar model parameters (other initial and boundary values)

b) other models

Legend

The following abbreviations are used in the document to indicate/resolve IDs:

doi: DOI / https://dx.doi.org/

sw: swMATH / https://swmath.org/software/

wikidata: https://www.wikidata.org/wiki/